Reading post (04/27/25)

Deep-dive into Linkedin's 360Brew paper, How AI enhanced CCTA Outshines Traditional Proxies in Predicting Coronary Artery Disease, and US electricity capacity could be the biggest hurdle for AI

360Brew : A Decoder-only Foundation Model for Personalized Recommendations (paper)

Introduction

Personalized recommendation systems are crucial for enhancing user experience across various online platforms. Traditionally, these systems have employed a multitude of task-specific models, often requiring significant development and maintenance efforts. e.g. tasks specific models for some of the common events at linked (e.g., jobs, posts, connections), leads to redundancy in development and potential inefficiencies

360Brew tackles this by proposing a single foundation model that can be adapted to different tasks without requiring task-specific fine-tuning.

Model Architecture:

360Brew adopts a decoder-only transformer architecture. This choice aligns with the growing trend of using transformer models for sequential data processing and leverages the strengths of the decoder architecture in generative and conditional tasks.

It takes a departure from the conventional approach where text and content understanding models serve merely as feature generators for traditional ID-based recommendation systems. Instead, the authors propose a paradigm shift by directly employing a Large Language Model (LLM) with a natural language interface as the core recommendation engine.

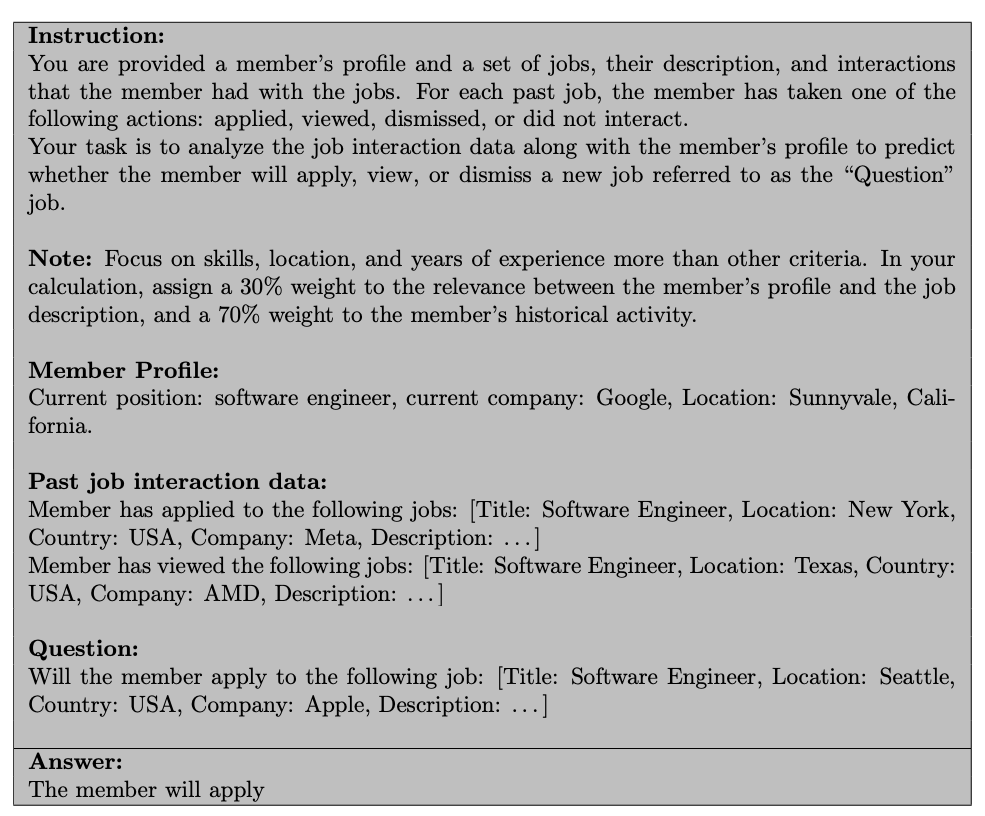

The paper argues that in any recommendation task, a member's unique profile and interaction history can be viewed as a 'many-shot problem'. By conditioning the LLM-based ranking model on this rich context, the model can identify highly personalized patterns and extrapolate these patterns to predict future interactions. This setup allows for the reformulation of the recommendation problem as in-context learning, where the foundation model learns to approximate the joint distribution of members, interactions, and tasks. This enables the estimation of various marginal probabilities for specific recommendation tasks, given a 'Task Instruction', the member's profile, their interaction history, and the new items to be considered.

The authors draw a parallel between this approach and the next-token prediction objective used in training decoder-only LLMs. This similarity allows them to adapt such an architecture for their foundation ranking model, named 360Brew. They provide an illustrative example of a prompt that combines the member's profile, past job interaction data (applications, views, dismissals), and a 'Question' job, along with specific instructions on how to weigh different factors (like skills, location, experience, and past activity) to predict the member's future action (apply, view, or dismiss).

Results:

The 360Brew V1.0 model, built upon the Mixtral 8x22 architecture and trained on a vast amount of LinkedIn data across numerous surfaces, was evaluated on over 30 tasks, including both in-domain and out-of-domain scenarios. Results indicated that scaling the training data led to significant performance improvements compared to established baseline models, which required years of development. Furthermore, increasing the model size and the context length (history) also positively impacted performance. Notably, 360Brew demonstrated strong generalization capabilities by achieving comparable or better performance than production models on out-of-domain tasks, exhibiting a larger performance margin in cold-start scenarios (users with fewer interactions), and showing more resilience to temporal distribution shifts, potentially reducing the need for frequent model updates

Commentary:

The concept of a large LLM type unified foundation model with textual interface for personalized recommendation across diverse tasks is an interesting direction. The success reported by the LinkedIn team in achieving performance parity or even improvements over existing specialized systems without task-specific fine-tuning is definitely interesting.

However, several questions arise in my mind while reading the paper. The search results do not delve into the specific details of the pre-training data and objectives used to create this foundation model. Understanding how the model is trained to learn such generalizable representations would be crucial. The ID-based user and item side features encode richer representation of users/items and have been instrumental in scaling billion user scale recommendation systems. There could be an opportunity to utilize textual interface along with sequence learning from ID based events features together to create generalized and performant recommendation systems

How AI enhanced CCTA Outshines Traditional Proxies in Predicting Coronary Artery Disease

I came across this note by Dr. Ronald Karlsberg proposing a targeted approach to eradicate coronary artery disease (CAD) by targeting atherosclerotic plaque directly, using AI-enhanced coronary CT angiography (CCTA) to assess plaque type and progression, which predicts heart attack risk more accurately than traditional risk factors like cholesterol levels.For decades, efforts focus on risk factors—high LDL, smoking, or diabetes—as proxies for CAD. These are critical proxies that have correlation with CAD, but they often sidestep the core issue: atherosclerotic plaque buildup in the arteries, which is what eventually leads to CAD.

Personalization is key to advancing the fight against coronary artery disease (CAD). Genetic testing, like polygenic risk scores, can pinpoint individuals with an inherited CAD risk, which may account for up to 50% of their overall risk. When combined with AI-powered imaging, this approach clarifies who needs intervention and who doesn’t, even if they have traditional risk factors.

Karlsberg advocates for a personalized, multi-pronged strategy—combining lifestyle changes, advanced imaging, genetics, and new therapies like photon-counting CT—to transform CAD from a leading killer into a preventable condition, mirroring the precision seen in modern cancer care. The main question now is how accessible it is. Serial CCTA, which is widely accessible in the US, might cost $1,500–$4,500 over three years, while PCCT could range from $1,000–$2,500 per scan, potentially totaling $3,000–$7,500 for serial use. AI analysis and consultations add to these costs. Like everything, insurance coverage might be limited to when this is deemed “medically necessary” [1, 2, 3]

US electricity capacity could be the biggest hurdle for scaling AI

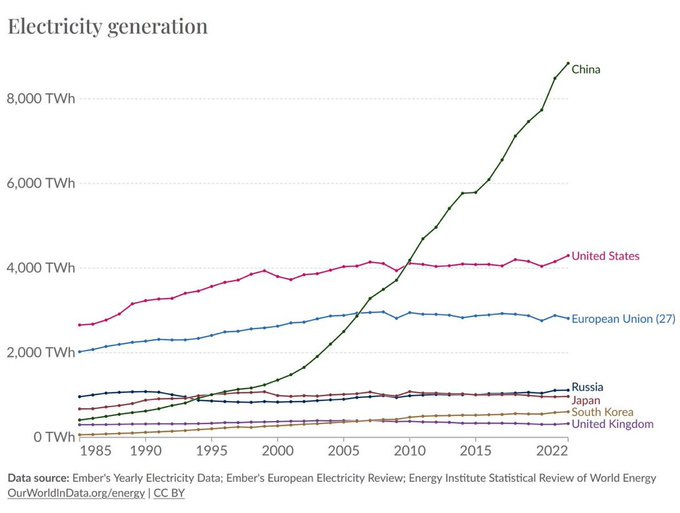

The US has been struggling to add new electricity generation capacity. In the last 25 years, the capacity in the US has been flat whereas China as grown 6X (chart below). This disparity in growth raises questions about the US's ability to meet future energy demands, especially for supporting and maintaining the lead in AI

One of the factors contributing to slower capacity growth in the US is the complex and often lengthy regulatory process involved in establishing new power plants, particularly for base-load sources like nuclear. The environmental permitting, safety reviews, and public consultations can significantly extend the timeline and increase the cost of bringing new generation online. Renewable energy generation is also falling behind to due supply chain dependencies. About 28% of renewable energy projected expected to land in 2024 have been delayed or canceled, representing 42GW capacity. Even though there are legislative efforts, like Trump administrations effort to fast permit local power plans next to the data-centers, the regulatory hurdles remain substantial.

In contrast, China has been aggressively scaling up its nuclear power production capacity. Over the past decade, China added more than 34 gigawatts of nuclear power capacity, bringing its operational reactor count to 55 with a total net capacity of 53.2 GW as of April 2024. An additional 23 reactors are currently under construction, expected to add another 23.7 GW in the coming years. This rapid expansion underscores China's commitment to diversifying its energy mix and reducing reliance on fossil fuels, and their streamlined approach to nuclear development contrasts with the more protracted processes in the US.